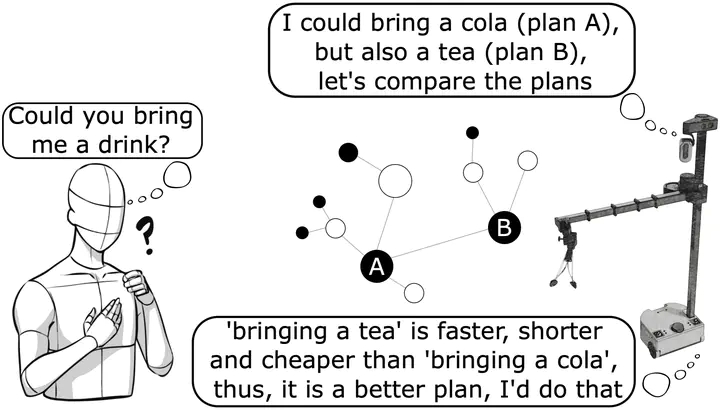

Ontological modeling and reasoning for comparison and contrastive explanation of robot plans

Abstract

This extended abstract presents an approach to modeling and reasoning about the comparison of competing plans, so that robots can later explain how the plans' results diverge. First, the need for a novel ontological model that enables robots to formalize and reason about plan divergences is identified. Then, a new ontological theory is proposed to support the classification of plans (e.g., as the shortest, the safest, or the closest to human preferences). Finally, the limitations of a baseline algorithm for ontology-based explanatory narration are examined, and a novel algorithm is introduced that leverages the knowledge about divergences between plans to construct contrastive narratives. An empirical evaluation assesses the quality of the explanations produced by the proposed algorithm, which outperforms the baseline method.